I haven’t forgotten about writing the next post on the issue of representations — it might take some time but ultimately I will get there!

The 2024 Nobel Prize in Physics, awarded to John Hopfield and Geoffrey Hinton, sparked some interest in how neural network research relates to other fields, and where it sits within them. There were long debates on Twitter and elsewhere about whether this “counts” as physics (as well as the somewhat expected endless arguments about credit and recognition).

I’ve avoided taking part in these debates in real time, because I didn’t think they would have any productive outcome. Instead, today I want to share a compressed view of the larger question: an attempt to trace, in broad strokes, the development of neural network modelling. Hopefully this can provide context that is a bit broader than what popular presentations of this topic typically offer.

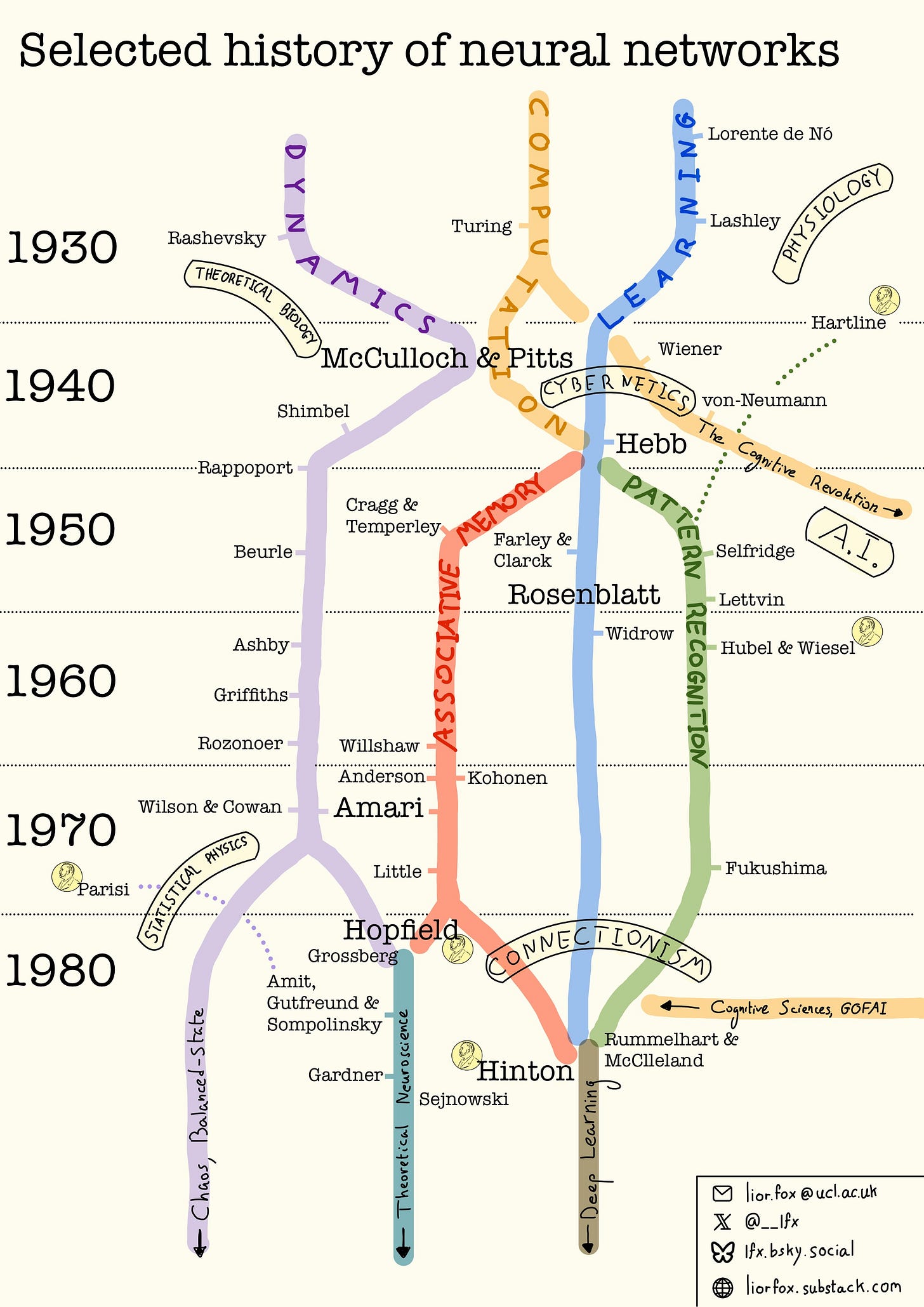

Rather than presenting this textually, I have worked over the past few weeks on creating some kind of a visual map, highlighting a “selected history” of neural networks. Arranging the information visually helps in making the interactions, splits, and influences among different “trajectories” pop-out. The timeline structure also helps in appreciating how long some of this history is, and how back far back some of the foundational ideas or concepts go:

The “price” of the visual format is that the information is rather minimal. A written text could have expanded on each element in a few words, given an explicit reference to the paper, and explained the major question or development it helped address. This would also put more emphasis on the scientific content and questions, as opposed to just the names. That information does exist, because the graphic largely follows a short chapter that my co-authors and I have written. It will eventually be published as part of the introductory chapter of our book, but for now I can’t share the full text here. As a halfway solution, I’ve created a clickable version of the graphic, where each name links to the relevant resource. I have tried to link to the original works where possible (mostly papers, some books), but inevitably a small number of links point to retrospective reviews of the person or work. The interactive/clickable version can be found here.

As with any such list, the selection is subjective, reflecting personal interests and understanding, and this one is no exception. It is a selected history, not intended as an exhaustive or authoritative account of computational neuroscience, or AI, or even of “Neural Networks” (to the extent that this is a field of its own). Rather, it aims to trace the development of ideas and research agendas in broad strokes, mainly along two themes: computation and dynamics.

Moreover, I’ve intentionally stopped at the 1980s, for two major reasons (and a third, minor one). First, I believe that the history since the 1980s is more or less widely known.¹ Second, the research “trajectories” that characterized (part of) this field until the 1980s might not be the most relevant trajectories, or axes of development, since then. In some sense, such a summary map can only be made retrospectively, with some historical context (which we still don’t fully have), if it is not to be completely drowned out by all the recent developments. The third reason is that the closer this gets to the present, the greater the chance of mistakenly offending someone who is still alive and working by leaving them out.

I might keep updating the map in the future (and of course, I would appreciate feedback, comments, and criticism). In any case, taking the time to think about how to present this information graphically has been a fun exercise.

¹ Obviously, this is a false belief, as so much of Deep Learning as a field has been devoted to rediscovering and rebranding ideas from the ’80s and ’90s…